Hi~ pt

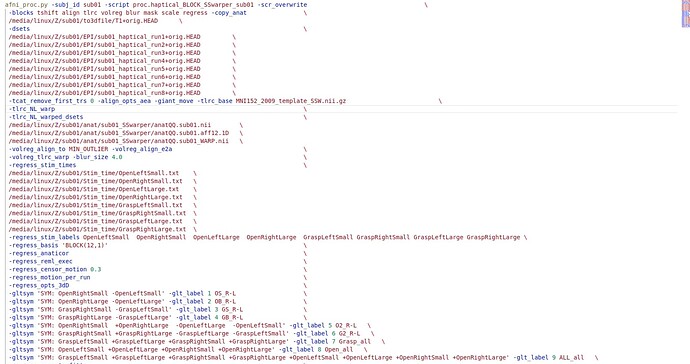

Here is our proc script, as generated by afni_proc.py:

#!/bin/tcsh -xef

echo "auto-generated by afni_proc.py, Thu Aug 22 18:33:31 2024"

echo "(version 7.60, August 21, 2023)"

echo "execution started: `date`"

# to execute via tcsh:

# tcsh -xef proc.haptical_BLOCK_SSwarper_sub01 |& tee output.proc.haptical_BLOCK_SSwarper_sub01

# to execute via bash:

# tcsh -xef proc.haptical_BLOCK_SSwarper_sub01 2>&1 | tee output.proc.haptical_BLOCK_SSwarper_sub01

# =========================== auto block: setup ============================

# script setup

# take note of the AFNI version

afni -ver

# check that the current AFNI version is recent enough

afni_history -check_date 14 Nov 2022

if ( $status ) then

echo "** this script requires newer AFNI binaries (than 14 Nov 2022)"

echo " (consider: @update.afni.binaries -defaults)"

exit

endif

# the user may specify a single subject to run with

if ( $#argv > 0 ) then

set subj = $argv[1]

else

set subj = sub01

endif

# assign output directory name

set output_dir = $subj.results

# verify that the results directory does not yet exist

if ( -d $output_dir ) then

echo output dir "$subj.results" already exists

exit

endif

# set list of runs

set runs = (`count -digits 2 1 8`)

# create results and stimuli directories

mkdir -p $output_dir

mkdir $output_dir/stimuli

# copy stim files into stimulus directory

cp /media/linux/Z/sub01/Stim_time/OpenLeftSmall.txt \

/media/linux/Z/sub01/Stim_time/OpenRightSmall.txt \

/media/linux/Z/sub01/Stim_time/OpenLeftLarge.txt \

/media/linux/Z/sub01/Stim_time/OpenRightLarge.txt \

/media/linux/Z/sub01/Stim_time/GraspLeftSmall.txt \

/media/linux/Z/sub01/Stim_time/GraspRightSmall.txt \

/media/linux/Z/sub01/Stim_time/GraspLeftLarge.txt \

/media/linux/Z/sub01/Stim_time/GraspRightLarge.txt $output_dir/stimuli

# copy anatomy to results dir

3dcopy /media/linux/Z/sub01/to3dfile/T1+orig $output_dir/T1

# copy template to results dir (for QC)

3dcopy /home/linux/abin/MNI152_2009_template_SSW.nii.gz \

$output_dir/MNI152_2009_template_SSW.nii.gz

# copy external -tlrc_NL_warped_dsets datasets

3dcopy /media/linux/Z/sub01/anat/sub01_SSwarper/anatQQ.sub01.nii \

$output_dir/anatQQ.sub01

3dcopy /media/linux/Z/sub01/anat/sub01_SSwarper/anatQQ.sub01.aff12.1D \

$output_dir/anatQQ.sub01.aff12.1D

3dcopy /media/linux/Z/sub01/anat/sub01_SSwarper/anatQQ.sub01_WARP.nii \

$output_dir/anatQQ.sub01_WARP.nii

# ============================ auto block: tcat ============================

# apply 3dTcat to copy input dsets to results dir,

# while removing the first 0 TRs

3dTcat -prefix $output_dir/pb00.$subj.r01.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run1+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r02.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run2+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r03.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run3+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r04.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run4+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r05.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run5+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r06.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run6+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r07.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run7+orig'[0..$]'

3dTcat -prefix $output_dir/pb00.$subj.r08.tcat \

/media/linux/Z/sub01/EPI/sub01_haptical_run8+orig'[0..$]'

# and make note of repetitions (TRs) per run

set tr_counts = ( 198 198 198 198 198 198 198 198 )

# -------------------------------------------------------

# enter the results directory (can begin processing data)

cd $output_dir

# ---------------------------------------------------------

# QC: look for columns of high variance

find_variance_lines.tcsh -polort 3 -nerode 2 \

-rdir vlines.pb00.tcat \

pb00.$subj.r*.tcat+orig.HEAD |& tee out.vlines.pb00.tcat.txt

# ========================== auto block: outcount ==========================

# QC: compute outlier fraction for each volume

touch out.pre_ss_warn.txt

foreach run ( $runs )

3dToutcount -automask -fraction -polort 3 -legendre \

pb00.$subj.r$run.tcat+orig > outcount.r$run.1D

# outliers at TR 0 might suggest pre-steady state TRs

if ( `1deval -a outcount.r$run.1D"{0}" -expr "step(a-0.4)"` ) then

echo "** TR #0 outliers: possible pre-steady state TRs in run $run" \

>> out.pre_ss_warn.txt

endif

end
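The TR #0 test above runs `1deval -expr "step(a-0.4)"` on row `{0}` of each run's `3dToutcount -fraction` output. AFNI's `step(x)` is 1 for x > 0 and 0 otherwise, so a run is flagged only when more than 40% of the automasked voxels are outliers at its first TR. A minimal Python sketch of that rule, assuming the per-run fractions have already been read into lists (the function names are hypothetical):

```python
# Sketch of the TR #0 pre-steady-state check; function names are hypothetical.


def step(x: float) -> int:
    """Mimic AFNI's step(): 1 when x > 0, otherwise 0."""
    return 1 if x > 0 else 0


def flag_pre_steady_state(outlier_fracs: dict[str, list[float]],
                          limit: float = 0.4) -> list[str]:
    """Return run labels whose TR #0 outlier fraction exceeds `limit`.

    outlier_fracs maps a run label ("01", "02", ...) to the per-TR outlier
    fractions that 3dToutcount -fraction would write for that run.
    """
    flagged = []
    for run in sorted(outlier_fracs):
        fracs = outlier_fracs[run]
        # step(a - 0.4) applied to row {0}: only the first TR of a run is tested
        if fracs and step(fracs[0] - limit):
            flagged.append(run)
    return flagged


if __name__ == "__main__":
    # made-up fractions, for illustration only
    example = {"01": [0.62, 0.01, 0.00], "02": [0.03, 0.02, 0.01]}
    for run in flag_pre_steady_state(example):
        print(f"** TR #0 outliers: possible pre-steady state TRs in run {run}")
```

A fraction of exactly 0.4 is not flagged, because `step(0)` is 0.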

# catenate outlier counts into a single time series

cat outcount.r*.1D > outcount_rall.1D

# get run number and TR index for minimum outlier volume

set minindex = `3dTstat -argmin -prefix - outcount_rall.1D\'`

set ovals = ( `1d_tool.py -set_run_lengths $tr_counts \

-index_to_run_tr $minindex` )

# save run and TR indices for extraction of vr_base_min_outlier

set minoutrun = $ovals[1]

set minouttr = $ovals[2]

echo "min outlier: run $minoutrun, TR $minouttr" | tee out.min_outlier.txt
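`3dTstat -argmin` on the transposed `outcount_rall.1D` gives a 0-based index into the catenated outlier series, and `1d_tool.py -set_run_lengths ... -index_to_run_tr` converts it back into a run and a TR within that run. A sketch of that conversion follows. It returns the run as a 1-based, zero-padded label because `$minoutrun` is dropped into `r01`-style file names, and the TR as a 0-based sub-brick index because that is how `$minouttr` is used; the exact output format of 1d_tool.py is an assumption here, and `index_to_run_tr` is a hypothetical name.

```python
def index_to_run_tr(index: int, run_lengths: list[int]) -> tuple[str, int]:
    """Map a 0-based index into the catenated outlier series to (run, TR).

    The run is returned as a 1-based, zero-padded label (e.g. "03") so it
    fits the r01-style file names; the TR is a 0-based sub-brick index.
    """
    if index < 0:
        raise ValueError("index must be non-negative")
    for run_num, n_trs in enumerate(run_lengths, start=1):
        if index < n_trs:
            return f"{run_num:02d}", index
        index -= n_trs  # skip past this whole run
    raise ValueError("index is past the end of the last run")


tr_counts = [198] * 8  # same as $tr_counts in the script
print(index_to_run_tr(500, tr_counts))  # -> ('03', 104)
```

With eight runs of 198 TRs, index 500 lands in the third run, since 500 - 198 - 198 = 104.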

# ================================= tshift =================================

# time shift data so all slice timing is the same

foreach run ( $runs )

3dTshift -tzero 0 -quintic -prefix pb01.$subj.r$run.tshift \

pb00.$subj.r$run.tcat+orig

end

# --------------------------------

# extract volreg registration base

3dbucket -prefix vr_base_min_outlier \

pb01.$subj.r$minoutrun.tshift+orig"[$minouttr]"

# ================================= align ==================================

# for e2a: compute anat alignment transformation to EPI registration base

# (new anat will be intermediate, stripped, T1_ns+orig)

align_epi_anat.py -anat2epi -anat T1+orig \

-save_skullstrip -suffix _al_junk \

-epi vr_base_min_outlier+orig -epi_base 0 \

-epi_strip 3dAutomask \

-giant_move \

-volreg off -tshift off

# ================================== tlrc ==================================

# nothing to do: have external -tlrc_NL_warped_dsets

# warped anat : anatQQ.sub01+tlrc

# affine xform : anatQQ.sub01.aff12.1D

# non-linear warp : anatQQ.sub01_WARP.nii

# ================================= volreg =================================

# align each dset to base volume, to anat, warp to tlrc space

# verify that we have a +tlrc warp dataset

if ( ! -f anatQQ.sub01+tlrc.HEAD ) then

echo "** missing +tlrc warp dataset: anatQQ.sub01+tlrc.HEAD"

exit

endif

# register and warp

foreach run ( $runs )

# register each volume to the base image

3dvolreg -verbose -zpad 1 -base vr_base_min_outlier+orig \

-1Dfile dfile.r$run.1D -prefix rm.epi.volreg.r$run \

-cubic \

-1Dmatrix_save mat.r$run.vr.aff12.1D \

pb01.$subj.r$run.tshift+orig

# create an all-1 dataset to mask the extents of the warp

3dcalc -overwrite -a pb01.$subj.r$run.tshift+orig -expr 1 \

-prefix rm.epi.all1

# catenate volreg/epi2anat/tlrc xforms

cat_matvec -ONELINE \

anatQQ.sub01.aff12.1D \

T1_al_junk_mat.aff12.1D -I \

mat.r$run.vr.aff12.1D > mat.r$run.warp.aff12.1D

# apply catenated xform: volreg/epi2anat/tlrc/NLtlrc

# then apply non-linear standard-space warp

3dNwarpApply -master anatQQ.sub01+tlrc -dxyz 2 \

-source pb01.$subj.r$run.tshift+orig \

-nwarp "anatQQ.sub01_WARP.nii mat.r$run.warp.aff12.1D" \

-prefix rm.epi.nomask.r$run

# warp the all-1 dataset for extents masking

3dNwarpApply -master anatQQ.sub01+tlrc -dxyz 2 \

-source rm.epi.all1+orig \

-nwarp "anatQQ.sub01_WARP.nii mat.r$run.warp.aff12.1D" \

-interp cubic \

-ainterp NN -quiet \

-prefix rm.epi.1.r$run

# make an extents intersection mask of this run

3dTstat -min -prefix rm.epi.min.r$run rm.epi.1.r$run+tlrc

end

# make a single file of registration params

cat dfile.r*.1D > dfile_rall.1D

# ----------------------------------------

# create the extents mask: mask_epi_extents+tlrc

# (this is a mask of voxels that have valid data at every TR)

3dMean -datum short -prefix rm.epi.mean rm.epi.min.r*.HEAD

3dcalc -a rm.epi.mean+tlrc -expr 'step(a-0.999)' -prefix mask_epi_extents

# and apply the extents mask to the EPI data

# (delete any time series with missing data)

foreach run ( $runs )

3dcalc -a rm.epi.nomask.r$run+tlrc -b mask_epi_extents+tlrc \

-expr 'a*b' -prefix pb02.$subj.r$run.volreg

end
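The extents mask logic above works in two stages: `3dTstat -min` over each run's warped all-1 dataset leaves 1 only where a voxel has data at every TR of that run, and `3dMean` plus `step(a-0.999)` then keeps only voxels whose per-run minimum is 1 in every run. The loop that follows multiplies each run by that mask (`'a*b'`). A small sketch on plain nested lists, assuming the warped all-1 values are 0 or 1 (the script warps them with `-ainterp NN`); `extents_mask` is a hypothetical name:

```python
def extents_mask(runs: list[list[list[float]]]) -> list[int]:
    """Sketch of mask_epi_extents.

    runs[r][t][v] is the warped all-1 value for run r, TR t, voxel v
    (0 where the warp pulled in data from outside the EPI field of view).
    """
    nvox = len(runs[0][0])
    # 3dTstat -min: a voxel keeps 1 only if it has data at every TR of the run
    run_mins = [[min(tr[v] for tr in run) for v in range(nvox)] for run in runs]
    # 3dMean across runs, then 3dcalc -expr 'step(a-0.999)'
    means = [sum(rm[v] for rm in run_mins) / len(run_mins) for v in range(nvox)]
    return [1 if m - 0.999 > 0 else 0 for m in means]


# two runs, two TRs, three voxels (made-up values)
runs = [[[1, 1, 0], [1, 1, 1]],
        [[1, 0, 1], [1, 1, 1]]]
print(extents_mask(runs))  # -> [1, 0, 0]
```

Voxels 2 and 3 each drop out of one run at one TR, so neither survives, even though each is valid at most time points.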

# warp the volreg base EPI dataset to make a final version

cat_matvec -ONELINE \

anatQQ.sub01.aff12.1D \

T1_al_junk_mat.aff12.1D -I > mat.basewarp.aff12.1D

3dNwarpApply -master anatQQ.sub01+tlrc -dxyz 2 \

-source vr_base_min_outlier+orig \

-nwarp "anatQQ.sub01_WARP.nii mat.basewarp.aff12.1D" \

-prefix final_epi_vr_base_min_outlier

# create an anat_final dataset, aligned with stats

3dcopy anatQQ.sub01+tlrc anat_final.$subj

# record final registration costs

3dAllineate -base final_epi_vr_base_min_outlier+tlrc -allcostX \

-input anat_final.$subj+tlrc |& tee out.allcostX.txt

# -----------------------------------------

# warp anat follower datasets (non-linear)

# warp follower dataset T1+orig

3dNwarpApply -source T1+orig \

-master anat_final.$subj+tlrc \

-ainterp wsinc5 -nwarp anatQQ.sub01_WARP.nii \

anatQQ.sub01.aff12.1D \

-prefix anat_w_skull_warped

# ================================== blur ==================================

# blur each volume of each run

foreach run ( $runs )

3dmerge -1blur_fwhm 4.0 -doall -prefix pb03.$subj.r$run.blur \

pb02.$subj.r$run.volreg+tlrc

end

# ================================== mask ==================================

# create 'full_mask' dataset (union mask)

foreach run ( $runs )

3dAutomask -prefix rm.mask_r$run pb03.$subj.r$run.blur+tlrc

end

# create union of inputs, output type is byte

3dmask_tool -inputs rm.mask_r*+tlrc.HEAD -union -prefix full_mask.$subj

# ---- create subject anatomy mask, mask_anat.$subj+tlrc ----

# (resampled from tlrc anat)

3dresample -master full_mask.$subj+tlrc -input anatQQ.sub01+tlrc \

-prefix rm.resam.anat

# convert to binary anat mask; fill gaps and holes

3dmask_tool -dilate_input 5 -5 -fill_holes -input rm.resam.anat+tlrc \

-prefix mask_anat.$subj

# compute tighter EPI mask by intersecting with anat mask

3dmask_tool -input full_mask.$subj+tlrc mask_anat.$subj+tlrc \

-inter -prefix mask_epi_anat.$subj

# compute overlaps between anat and EPI masks

3dABoverlap -no_automask full_mask.$subj+tlrc mask_anat.$subj+tlrc \

|& tee out.mask_ae_overlap.txt

# note Dice coefficient of masks, as well

3ddot -dodice full_mask.$subj+tlrc mask_anat.$subj+tlrc \

|& tee out.mask_ae_dice.txt
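The `3ddot -dodice` calls here (and the anat-versus-template one further down) report the Sorensen-Dice coefficient, 2|A∩B| / (|A| + |B|), between two masks. A minimal sketch on flattened voxel lists, treating any nonzero value as inside the mask; `dice` is a hypothetical name, and returning 0.0 for two empty masks is this sketch's own convention:

```python
def dice(mask_a: list[float], mask_b: list[float]) -> float:
    """Sorensen-Dice overlap of two masks; nonzero values count as inside."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    in_a = [v != 0 for v in mask_a]
    in_b = [v != 0 for v in mask_b]
    both = sum(1 for a, b in zip(in_a, in_b) if a and b)
    total = sum(in_a) + sum(in_b)
    # convention for this sketch: two empty masks give 0.0
    return 2.0 * both / total if total else 0.0


print(dice([1, 1, 0, 0], [1, 0, 1, 0]))  # -> 0.5
```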

# ---- create group anatomy mask, mask_group+tlrc ----

# (resampled from tlrc base anat, MNI152_2009_template_SSW.nii.gz)

3dresample -master full_mask.$subj+tlrc -prefix ./rm.resam.group \

-input /home/linux/abin/MNI152_2009_template_SSW.nii.gz'[0]'

# convert to binary group mask; fill gaps and holes

3dmask_tool -dilate_input 5 -5 -fill_holes -input rm.resam.group+tlrc \

-prefix mask_group

# note Dice coefficient of anat and template masks

3ddot -dodice mask_anat.$subj+tlrc mask_group+tlrc \

|& tee out.mask_at_dice.txt

# ---- segment anatomy into classes CSF/GM/WM ----

3dSeg -anat anat_final.$subj+tlrc -mask AUTO -classes 'CSF ; GM ; WM'

# copy resulting Classes dataset to current directory

3dcopy Segsy/Classes+tlrc .

# make individual ROI masks for regression (CSF GM WM and CSFe GMe WMe)

foreach class ( CSF GM WM )

# unitize and resample individual class mask from composite

3dmask_tool -input Segsy/Classes+tlrc"<$class>" \

-prefix rm.mask_${class}

3dresample -master pb03.$subj.r01.blur+tlrc -rmode NN \

-input rm.mask_${class}+tlrc -prefix mask_${class}_resam

# also, generate eroded masks

3dmask_tool -input Segsy/Classes+tlrc"<$class>" -dilate_input -1 \

-prefix rm.mask_${class}e

3dresample -master pb03.$subj.r01.blur+tlrc -rmode NN \

-input rm.mask_${class}e+tlrc -prefix mask_${class}e_resam

end

# ================================= scale ==================================

# scale each voxel time series to have a mean of 100

# (be sure no negatives creep in)

# (subject to a range of [0,200])

foreach run ( $runs )

3dTstat -prefix rm.mean_r$run pb03.$subj.r$run.blur+tlrc

3dcalc -a pb03.$subj.r$run.blur+tlrc -b rm.mean_r$run+tlrc \

-c mask_epi_extents+tlrc \

-expr 'c * min(200, a/b*100)*step(a)*step(b)' \

-prefix pb04.$subj.r$run.scale

end
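For one voxel, the scaling expression `c * min(200, a/b*100)*step(a)*step(b)` converts each sample to percent of the run mean (`b`, from `3dTstat`'s default mean statistic), caps it at 200, zeroes non-positive samples, and zeroes the whole series outside the extents mask (`c`) or where the mean is not positive. A sketch of that per-voxel arithmetic; `scale_voxel` is a hypothetical name:

```python
def scale_voxel(ts: list[float], in_extents: bool, cap: float = 200.0) -> list[float]:
    """Percent-of-mean scaling for one voxel time series (sketch)."""
    mean = sum(ts) / len(ts)  # 3dTstat's default statistic is the mean
    # c * ... * step(b): zero outside the extents mask or when the mean is not positive
    if not in_extents or mean <= 0:
        return [0.0] * len(ts)
    # min(200, a/b*100) * step(a): cap at 200 and zero any non-positive sample
    return [min(cap, a / mean * 100.0) if a > 0 else 0.0 for a in ts]


print(scale_voxel([50.0, 150.0, 100.0], True))  # -> [50.0, 150.0, 100.0]
print(scale_voxel([-1.0, 3.0], True))           # -> [0.0, 200.0]
```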

# ================================ regress =================================

# compute de-meaned motion parameters (for use in regression)

1d_tool.py -infile dfile_rall.1D -set_nruns 8 \

-demean -write motion_demean.1D

# compute motion parameter derivatives (just to have)

1d_tool.py -infile dfile_rall.1D -set_nruns 8 \

-derivative -demean -write motion_deriv.1D

# convert motion parameters for per-run regression

1d_tool.py -infile motion_demean.1D -set_nruns 8 \

-split_into_pad_runs mot_demean
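`-set_nruns 8` tells 1d_tool.py that the 1584 rows of `dfile_rall.1D` form eight equal-length runs. `-split_into_pad_runs mot_demean` then writes one file per run (the `mot_demean.r01.1D` through `mot_demean.r08.1D` that the `-ortvec` options read), each as long as the full catenated series but zero outside its own run, so every run gets its own six motion regressors. A sketch of that padding, assuming explicit run lengths (as with `-set_run_lengths`); `split_into_pad_runs` here is a hypothetical Python helper, not 1d_tool.py's code:

```python
def split_into_pad_runs(rows: list[list[float]],
                        run_lengths: list[int]) -> list[list[list[float]]]:
    """Return one full-length copy of `rows` per run, zeroed outside that run."""
    total = sum(run_lengths)
    if len(rows) != total:
        raise ValueError("row count must equal the summed run lengths")
    ncols = len(rows[0])
    padded_runs = []
    start = 0
    for n_trs in run_lengths:
        padded = [[0.0] * ncols for _ in range(total)]
        for t in range(start, start + n_trs):
            padded[t] = list(rows[t])  # keep this run's motion values only
        padded_runs.append(padded)
        start += n_trs
    return padded_runs


# three TRs of two made-up parameters, split into runs of 2 and 1 TRs
for padded in split_into_pad_runs([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [2, 1]):
    print(padded)
```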

# create censor file motion_${subj}_censor.1D, for censoring motion

1d_tool.py -infile dfile_rall.1D -set_nruns 8 \

-show_censor_count -censor_prev_TR \

-censor_motion 0.3 motion_${subj}
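As we understand `-censor_motion 0.3`, 1d_tool.py takes backward differences of the six volreg parameters within each run (so no difference is taken across run boundaries), combines them into a Euclidean norm ("enorm") per TR, and censors TRs whose enorm exceeds 0.3; `-censor_prev_TR` also censors the TR just before each such jump. Rotations (degrees) and shifts (mm) enter the norm as-is. The sketch below follows that reading; whether the comparison is strict, and the helper name `motion_censor`, are assumptions:

```python
import math


def motion_censor(params: list[list[float]], run_lengths: list[int],
                  limit: float = 0.3, censor_prev_tr: bool = True) -> list[int]:
    """Return a censor vector (1 = keep, 0 = censor), one entry per TR.

    params holds one row of the six volreg parameters per TR, with all
    runs catenated in order.
    """
    n = len(params)
    if n != sum(run_lengths):
        raise ValueError("row count must equal the summed run lengths")
    enorm = [0.0] * n
    start = 0
    for n_trs in run_lengths:
        # backward differences within the run; the run's first TR keeps enorm 0
        for t in range(start + 1, start + n_trs):
            diffs = [a - b for a, b in zip(params[t], params[t - 1])]
            enorm[t] = math.sqrt(sum(d * d for d in diffs))
        start += n_trs
    keep = [1] * n
    for t, value in enumerate(enorm):
        if value > limit:
            keep[t] = 0
            if censor_prev_tr and t > 0:
                keep[t - 1] = 0  # -censor_prev_TR: drop the TR before the jump too
    return keep


rows = [[0.0] * 6, [0.1, 0, 0, 0, 0, 0], [0.5, 0, 0, 0, 0, 0], [0.5, 0, 0, 0, 0, 0]]
print(motion_censor(rows, [4]))  # -> [1, 0, 0, 1]
```

The 0.4 jump between TRs 1 and 2 censors both of them, while the same offset placed at a run boundary would not be censored at all.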

# note TRs that were not censored

# (apply from a text file, in case of a lot of censoring)

1d_tool.py -infile motion_${subj}_censor.1D \

-show_trs_uncensored space \

> out.keep_trs_rall.txt

set ktrs = "1dcat out.keep_trs_rall.txt"

# ------------------------------

# run the regression analysis

3dDeconvolve -input pb04.$subj.r*.scale+tlrc.HEAD \

-censor motion_${subj}_censor.1D \

-ortvec mot_demean.r01.1D mot_demean_r01 \

-ortvec mot_demean.r02.1D mot_demean_r02 \

-ortvec mot_demean.r03.1D mot_demean_r03 \

-ortvec mot_demean.r04.1D mot_demean_r04 \

-ortvec mot_demean.r05.1D mot_demean_r05 \

-ortvec mot_demean.r06.1D mot_demean_r06 \

-ortvec mot_demean.r07.1D mot_demean_r07 \

-ortvec mot_demean.r08.1D mot_demean_r08 \

-polort 3 \

-GOFORIT 18 \

-num_stimts 8 \

-stim_times_IM 1 stimuli/OpenLeftSmall.txt 'BLOCK(12,1)' \

-stim_label 1 OpenLeftSmall \

-stim_times_IM 2 stimuli/OpenRightSmall.txt 'BLOCK(12,1)' \

-stim_label 2 OpenRightSmall \

-stim_times_IM 3 stimuli/OpenLeftLarge.txt 'BLOCK(12,1)' \

-stim_label 3 OpenLeftLarge \

-stim_times_IM 4 stimuli/OpenRightLarge.txt 'BLOCK(12,1)' \

-stim_label 4 OpenRightLarge \

-stim_times_IM 5 stimuli/GraspLeftSmall.txt 'BLOCK(12,1)' \

-stim_label 5 GraspLeftSmall \

-stim_times_IM 6 stimuli/GraspRightSmall.txt 'BLOCK(12,1)' \

-stim_label 6 GraspRightSmall \

-stim_times_IM 7 stimuli/GraspLeftLarge.txt 'BLOCK(12,1)' \

-stim_label 7 GraspLeftLarge \

-stim_times_IM 8 stimuli/GraspRightLarge.txt 'BLOCK(12,1)' \

-stim_label 8 GraspRightLarge \

-gltsym 'SYM: OpenRightSmall -OpenLeftSmall' \

-glt_label 1 OS_R-L \

-gltsym 'SYM: OpenRightLarge -OpenLeftLarge' \

-glt_label 2 OB_R-L \

-gltsym 'SYM: GraspRightSmall -GraspLeftSmall' \

-glt_label 3 GS_R-L \

-gltsym 'SYM: GraspRightLarge -GraspLeftLarge' \

-glt_label 4 GB_R-L \

-gltsym 'SYM: OpenRightSmall +OpenRightLarge -OpenLeftLarge \

-OpenLeftSmall' \

-glt_label 5 O2_R-L \

-gltsym 'SYM: GraspRightSmall +GraspRightLarge -GraspLeftLarge \

-GraspLeftSmall' \

-glt_label 6 G2_R-L \

-gltsym 'SYM: GraspLeftSmall +GraspLeftLarge +GraspRightSmall \

+GraspRightLarge' \

-glt_label 7 Grasp_all \

-gltsym 'SYM: OpenLeftSmall +OpenLeftLarge +OpenRightSmall \

+OpenRightLarge' \

-glt_label 8 Open_all \

-gltsym 'SYM: GraspLeftSmall +GraspLeftLarge +GraspRightSmall \

+GraspRightLarge +OpenLeftSmall +OpenLeftLarge +OpenRightSmall \

+OpenRightLarge' \

-glt_label 9 ALL_all \

-fout -tout -x1D X.xmat.1D -xjpeg X.jpg \

-x1D_uncensored X.nocensor.xmat.1D \

-errts errts.${subj} \

-bucket stats.$subj

# if 3dDeconvolve fails, terminate the script

if ( $status != 0 ) then

echo '---------------------------------------'

echo '** 3dDeconvolve error, failing...'

echo ' (consider the file 3dDeconvolve.err)'

exit

endif

# display any large pairwise correlations from the X-matrix

1d_tool.py -show_cormat_warnings -infile X.xmat.1D |& tee out.cormat_warn.txt

# display degrees of freedom info from X-matrix

1d_tool.py -show_df_info -infile X.xmat.1D |& tee out.df_info.txt

# --------------------------------------------------

# ANATICOR: generate local WMe time series averages

# create catenated volreg dataset

3dTcat -prefix rm.all_runs.volreg pb02.$subj.r*.volreg+tlrc.HEAD

3dLocalstat -stat mean -nbhd 'SPHERE(30)' -prefix Local_WMe_rall \

-mask mask_WMe_resam+tlrc -use_nonmask \

rm.all_runs.volreg+tlrc

# -- execute the 3dREMLfit script, written by 3dDeconvolve --

# (include ANATICOR regressors via -dsort)

tcsh -x stats.REML_cmd -dsort Local_WMe_rall+tlrc

# if 3dREMLfit fails, terminate the script

if ( $status != 0 ) then

echo '---------------------------------------'

echo '** 3dREMLfit error, failing...'

exit

endif

# create an all_runs dataset to match the fitts, errts, etc.

3dTcat -prefix all_runs.$subj pb04.$subj.r*.scale+tlrc.HEAD

# --------------------------------------------------

# create a temporal signal to noise ratio dataset

# signal: if 'scale' block, mean should be 100

# noise : compute standard deviation of errts

3dTstat -mean -prefix rm.signal.all all_runs.$subj+tlrc"[$ktrs]"

3dTstat -stdev -prefix rm.noise.all errts.${subj}_REML+tlrc"[$ktrs]"

3dcalc -a rm.signal.all+tlrc \

-b rm.noise.all+tlrc \

-expr 'a/b' -prefix TSNR.$subj
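Per voxel, TSNR is the mean of the scaled `all_runs` data divided by the standard deviation of the REML residuals, both restricted to the uncensored TRs (as we read the script, `$ktrs` holds the text `1dcat out.keep_trs_rall.txt`, so the `"[$ktrs]"` selector takes its TR list from that file). `3dTstat -stdev` removes a linear trend before computing the standard deviation (`-stdevNOD` skips that step), which the sketch below mimics with a least-squares line fit over the selected samples. The n - 1 denominator and the helper names are assumptions:

```python
import statistics


def detrended_stdev(values: list[float]) -> float:
    """Sample stdev after removing a least-squares line (like 3dTstat -stdev)."""
    n = len(values)
    x_mean = (n - 1) / 2.0
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx if sxx else 0.0
    residuals = [y - (y_mean + slope * (x - x_mean)) for x, y in enumerate(values)]
    return statistics.stdev(residuals)  # n - 1 denominator (assumption)


def tsnr(all_runs: list[float], errts: list[float], keep: list[int]) -> float:
    """TSNR for one voxel: mean scaled signal over residual noise, kept TRs only."""
    signal = statistics.fmean(all_runs[t] for t in keep)
    noise = detrended_stdev([errts[t] for t in keep])
    return signal / noise


# made-up voxel: TR 4 is censored, so its large residual is ignored
print(tsnr([100.0] * 5, [1.0, -1.0, 1.0, -1.0, 50.0], keep=[0, 1, 2, 3]))
```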

# ---------------------------------------------------

# compute and store GCOR (global correlation average)

# (sum of squares of global mean of unit errts)

3dTnorm -norm2 -prefix rm.errts.unit errts.${subj}_REML+tlrc

3dmaskave -quiet -mask full_mask.$subj+tlrc rm.errts.unit+tlrc \

> mean.errts.unit.1D

3dTstat -sos -prefix - mean.errts.unit.1D\' > out.gcor.1D

echo "-- GCOR = `cat out.gcor.1D`"
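The GCOR steps scale each residual time series to unit Euclidean length (`3dTnorm -norm2`), average those unit series across `full_mask` at every TR (`3dmaskave`), and take the sum of squares of that average (`3dTstat -sos`). When the residuals are zero-mean, this equals the average correlation over all voxel pairs (self-pairs included). A sketch on plain lists; `gcor` is a hypothetical name, and leaving zero-variance voxels as zeros is this sketch's own assumption:

```python
import math


def gcor(errts_by_voxel: list[list[float]]) -> float:
    """GCOR from residual time series (one list per in-mask voxel)."""
    unit = []
    for ts in errts_by_voxel:
        norm = math.sqrt(sum(x * x for x in ts))  # 3dTnorm -norm2
        unit.append([x / norm for x in ts] if norm > 0 else [0.0] * len(ts))
    n_vox, n_trs = len(unit), len(unit[0])
    # 3dmaskave: average the unit time series over the mask at each TR
    mean_ts = [sum(u[t] for u in unit) / n_vox for t in range(n_trs)]
    # 3dTstat -sos: sum of squares of that average series
    return sum(m * m for m in mean_ts)


print(gcor([[1.0, 0.0], [0.0, 1.0]]))  # orthogonal residuals
```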

# ---------------------------------------------------

# compute correlation volume

# (per voxel: correlation with masked brain average)

3dmaskave -quiet -mask full_mask.$subj+tlrc errts.${subj}_REML+tlrc \

> mean.errts.1D

3dTcorr1D -prefix corr_brain errts.${subj}_REML+tlrc mean.errts.1D
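`corr_brain` holds, for each voxel, the correlation between its REML residual time series and the mask-averaged residual series from `3dmaskave`; Pearson correlation is `3dTcorr1D`'s default method. A sketch on plain lists; the helper names are hypothetical, and returning 0.0 for a constant series is this sketch's convention:

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # sketch convention for constant series
    return sxy / math.sqrt(sxx * syy)


def corr_brain(errts_by_voxel: list[list[float]]) -> list[float]:
    """Correlate each voxel's residuals with the mask-average residual series."""
    n_vox = len(errts_by_voxel)
    n_trs = len(errts_by_voxel[0])
    mean_ts = [sum(ts[t] for ts in errts_by_voxel) / n_vox for t in range(n_trs)]
    return [pearson(ts, mean_ts) for ts in errts_by_voxel]


print(corr_brain([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [3.0, 2.0, 1.0]]))
```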

# create fitts dataset from all_runs and errts

3dcalc -a all_runs.$subj+tlrc -b errts.${subj}+tlrc -expr a-b \

-prefix fitts.$subj

# create fitts from REML errts

3dcalc -a all_runs.$subj+tlrc -b errts.${subj}_REML+tlrc -expr a-b \

-prefix fitts.$subj\_REML

# create ideal files for fixed response stim types

1dcat X.nocensor.xmat.1D'[32]' > ideal_OpenLeftSmall.1D

1dcat X.nocensor.xmat.1D'[33]' > ideal_OpenRightSmall.1D

1dcat X.nocensor.xmat.1D'[34]' > ideal_OpenLeftLarge.1D

1dcat X.nocensor.xmat.1D'[35]' > ideal_OpenRightLarge.1D

1dcat X.nocensor.xmat.1D'[36]' > ideal_GraspLeftSmall.1D

1dcat X.nocensor.xmat.1D'[37]' > ideal_GraspRightSmall.1D

1dcat X.nocensor.xmat.1D'[38]' > ideal_GraspLeftLarge.1D

1dcat X.nocensor.xmat.1D'[39]' > ideal_GraspRightLarge.1D

# --------------------------------------------------

# extract non-baseline regressors from the X-matrix,

# then compute their sum

1d_tool.py -infile X.nocensor.xmat.1D -write_xstim X.stim.xmat.1D

3dTstat -sum -prefix sum_ideal.1D X.stim.xmat.1D

# ============================ blur estimation =============================

# compute blur estimates

touch blur_est.$subj.1D # start with empty file

# create directory for ACF curve files

mkdir files_ACF

# -- estimate blur for each run in epits --

touch blur.epits.1D

# restrict to uncensored TRs, per run

foreach run ( $runs )

set trs = `1d_tool.py -infile X.xmat.1D -show_trs_uncensored encoded \

-show_trs_run $run`

if ( $trs == "" ) continue

3dFWHMx -detrend -mask full_mask.$subj+tlrc \

-ACF files_ACF/out.3dFWHMx.ACF.epits.r$run.1D \

all_runs.$subj+tlrc"[$trs]" >> blur.epits.1D

end

# compute average FWHM blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.epits.1D'{0..$(2)}'\'` )

echo average epits FWHM blurs: $blurs

echo "$blurs # epits FWHM blur estimates" >> blur_est.$subj.1D

# compute average ACF blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.epits.1D'{1..$(2)}'\'` )

echo average epits ACF blurs: $blurs

echo "$blurs # epits ACF blur estimates" >> blur_est.$subj.1D
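Each `3dFWHMx ... -ACF` call in these loops appends two rows per run to the `blur.*.1D` file, a FWHM row followed by an ACF row. That is why the script averages rows `{0..$(2)}` (0, 2, 4, ...) and `{1..$(2)}` (1, 3, 5, ...) separately; the trailing `\'` transposes the selection so `3dTstat -mean` averages each column across runs. Runs with no uncensored TRs hit `continue` and add no rows. A sketch of that averaging with made-up numbers; `average_every_other_row` is a hypothetical name:

```python
def average_every_other_row(rows: list[list[float]], start: int) -> list[float]:
    """Column means of rows start, start+2, ... (like the '{0..$(2)}' selector)."""
    picked = rows[start::2]
    if not picked:
        raise ValueError("no rows selected")
    n_cols = len(picked[0])
    return [sum(r[c] for r in picked) / len(picked) for c in range(n_cols)]


# two runs -> four rows: FWHM, ACF, FWHM, ACF (made-up values)
rows = [[4.0, 4.0, 4.0, 4.0], [0.5, 2.0, 10.0, 6.0],
        [5.0, 5.0, 5.0, 5.0], [1.0, 3.0, 12.0, 7.0]]
print(average_every_other_row(rows, 0))  # -> [4.5, 4.5, 4.5, 4.5]
print(average_every_other_row(rows, 1))  # -> [0.75, 2.5, 11.0, 6.5]
```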

# -- estimate blur for each run in errts --

touch blur.errts.1D

# restrict to uncensored TRs, per run

foreach run ( $runs )

set trs = `1d_tool.py -infile X.xmat.1D -show_trs_uncensored encoded \

-show_trs_run $run`

if ( $trs == "" ) continue

3dFWHMx -detrend -mask full_mask.$subj+tlrc \

-ACF files_ACF/out.3dFWHMx.ACF.errts.r$run.1D \

errts.${subj}+tlrc"[$trs]" >> blur.errts.1D

end

# compute average FWHM blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.errts.1D'{0..$(2)}'\'` )

echo average errts FWHM blurs: $blurs

echo "$blurs # errts FWHM blur estimates" >> blur_est.$subj.1D

# compute average ACF blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.errts.1D'{1..$(2)}'\'` )

echo average errts ACF blurs: $blurs

echo "$blurs # errts ACF blur estimates" >> blur_est.$subj.1D

# -- estimate blur for each run in err_reml --

touch blur.err_reml.1D

# restrict to uncensored TRs, per run

foreach run ( $runs )

set trs = `1d_tool.py -infile X.xmat.1D -show_trs_uncensored encoded \

-show_trs_run $run`

if ( $trs == "" ) continue

3dFWHMx -detrend -mask full_mask.$subj+tlrc \

-ACF files_ACF/out.3dFWHMx.ACF.err_reml.r$run.1D \

errts.${subj}_REML+tlrc"[$trs]" >> blur.err_reml.1D

end

# compute average FWHM blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.err_reml.1D'{0..$(2)}'\'` )

echo average err_reml FWHM blurs: $blurs

echo "$blurs # err_reml FWHM blur estimates" >> blur_est.$subj.1D

# compute average ACF blur (from every other row) and append

set blurs = ( `3dTstat -mean -prefix - blur.err_reml.1D'{1..$(2)}'\'` )

echo average err_reml ACF blurs: $blurs

echo "$blurs # err_reml ACF blur estimates" >> blur_est.$subj.1D

# add 3dClustSim results as attributes to any stats dset

mkdir files_ClustSim

# run Monte Carlo simulations using method 'ACF'

set params = ( `grep ACF blur_est.$subj.1D | tail -n 1` )

3dClustSim -both -mask full_mask.$subj+tlrc -acf $params[1-3] \

-cmd 3dClustSim.ACF.cmd -prefix files_ClustSim/ClustSim.ACF
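Because the err_reml block is written last, `grep ACF blur_est.$subj.1D | tail -n 1` selects the err_reml ACF line, and `$params[1-3]` (tcsh word indices start at 1) are its first three numbers, the a, b, c values passed to `3dClustSim -acf`. A sketch of the same selection on the file's text; the helper name and the numbers in the example are made up:

```python
def clustsim_acf_params(blur_est_text: str) -> list[str]:
    """First three fields of the last line mentioning ACF (grep ACF | tail -n 1)."""
    acf_lines = [line for line in blur_est_text.splitlines() if "ACF" in line]
    if not acf_lines:
        raise ValueError("no ACF line found")
    return acf_lines[-1].split()[:3]


example = (
    "4.1 4.2 4.0 4.1 # epits FWHM blur estimates\n"
    "0.5 2.0 10.0 6.0 # epits ACF blur estimates\n"
    "3.9 4.0 3.8 3.9 # errts FWHM blur estimates\n"
    "0.6 2.1 9.0 5.5 # errts ACF blur estimates\n"
    "3.8 3.9 3.7 3.8 # err_reml FWHM blur estimates\n"
    "0.7 2.2 8.0 5.0 # err_reml ACF blur estimates\n"
)
print(clustsim_acf_params(example))  # -> ['0.7', '2.2', '8.0']
```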

# run 3drefit to attach 3dClustSim results to stats

set cmd = ( `cat 3dClustSim.ACF.cmd` )

$cmd stats.$subj+tlrc stats.${subj}_REML+tlrc

# ---------------------------------------------------------

# QC: compute correlations with spherical ~averages

@radial_correlate -nfirst 0 -polort 3 -do_clean yes \

-rdir radcor.pb05.regress \

all_runs.$subj+tlrc.HEAD errts.${subj}_REML+tlrc.HEAD

# ========================= auto block: QC_review ==========================

# generate quality control review scripts and HTML report

# generate a review script for the unprocessed EPI data

gen_epi_review.py -script @epi_review.$subj \

-dsets pb00.$subj.r*.tcat+orig.HEAD

# -------------------------------------------------

# generate scripts to review single subject results

# (try with defaults, but do not allow bad exit status)

# write AP uvars into a simple txt file

cat << EOF > out.ap_uvars.txt

mot_limit : 0.3

copy_anat : T1+orig.HEAD

mask_dset : full_mask.$subj+tlrc.HEAD

template : MNI152_2009_template_SSW.nii.gz

ss_review_dset : out.ss_review.$subj.txt

vlines_tcat_dir : vlines.pb00.tcat

EOF

# and convert the txt format to JSON

cat out.ap_uvars.txt | afni_python_wrapper.py -eval "data_file_to_json()" \

> out.ap_uvars.json

# initialize gen_ss_review_scripts.py with out.ap_uvars.json

gen_ss_review_scripts.py -exit0 \

-init_uvars_json out.ap_uvars.json \

-write_uvars_json out.ss_review_uvars.json

# ========================== auto block: finalize ==========================

# remove temporary files

\rm -fr rm.* Segsy

# if the basic subject review script is here, run it

# (want this to be the last text output)

if ( -e @ss_review_basic ) then

./@ss_review_basic |& tee out.ss_review.$subj.txt

# generate html ss review pages

# (akin to static images from running @ss_review_driver)

apqc_make_tcsh.py -review_style pythonic -subj_dir . \

-uvar_json out.ss_review_uvars.json

apqc_make_html.py -qc_dir QC_$subj

echo "\nconsider running: \n"

echo " afni_open -b $subj.results/QC_$subj/index.html"

echo ""

endif

# return to parent directory (just in case...)

cd ..

echo "execution finished: `date`"

Interestingly, for another subject collected on the same day (sub02), the same script ran to completion. So we would really like to understand what is going wrong with sub01 and how to solve it.

Thanks,

Qianqian Wu