Greetings, venerable AFNIstas-

I’m new to the 3dMVM game so please feel free to direct me to a previous thread if you’ve already answered this. Just want to make sure that our coding matches our questions.

We have two quantitative variables per subject: 1) CSF concentration of the dopamine metabolite homovanillic acid (HVA); and 2) age, which tends to be weakly but significantly correlated with HVA. Within a single group, we’d like to look at the following in relation to some rsfMRI indices:

- HVA, with the relation between age and the rsfMRI DV accounted for

- age, with the relation between HVA and the rsfMRI DV accounted for

It seems that this could be handled by the following:

3dMVM \
-prefix Age_HVA \
-bsVars "Age+HVA" \
-qVars "Age,HVA" \
-jobs 4 \
-dataTable \
Subj Age HVA InputFile \
AdP-003-1 54 211 /halfpipe/sub-MDD003/func/task-rest/sub-MDD003_task-rest_feature-dualReg_map-FINDIca_component-12_stat-effect_statmap.nii.gz \
...

Aye or nay?

And, a follow-up question:

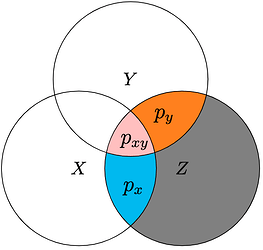

What if I’m interested not in how HVA and age might uniquely account for variability in the rsfMRI data but in the complement to that: how the variance shared by age and HVA relates to the imaging indices? It is probably most efficient to handle this first on the side of the two predictor variables, i.e., calculate each subject’s projection onto the first principal component of age and HVA and then use that as a quantitative variable (-qVars) in 3dMVM.
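To make the idea concrete, here is a rough sketch of the PC1 step as I picture it, in plain Python. It rests on some assumptions: both variables are z-scored first (age and HVA are on different scales, so I'm assuming the PCA should run on the correlation matrix rather than the covariance matrix), the helper names are made up for illustration, and every value except the first age/HVA pair is a placeholder rather than real data. With only two standardized variables, PC1 reduces to their scaled sum (or their difference, if they correlate negatively).

```python
import math
import statistics


def zscore(values):
    """Center to mean 0 and scale to sample SD 1."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def first_pc_scores(x, y):
    """Project each subject onto PC1 of two z-scored variables.

    For a 2x2 correlation matrix [[1, r], [r, 1]] the leading
    eigenvector is (1, sign(r)) / sqrt(2), so no eigen-solver is needed.
    """
    zx, zy = zscore(x), zscore(y)
    n = len(zx)
    # Pearson correlation from the z-scores (sample formula, n - 1).
    r = sum(a * b for a, b in zip(zx, zy)) / (n - 1)
    sign = 1.0 if r >= 0 else -1.0
    scores = [(a + sign * b) / math.sqrt(2) for a, b in zip(zx, zy)]
    return scores, r


# Only the first pair (54, 211) comes from my table above;
# the remaining values are made-up placeholders.
age = [54, 31, 47, 62, 28, 39]
hva = [211, 150, 190, 240, 160, 170]

scores, r = first_pc_scores(age, hva)
for a, h, s in zip(age, hva, scores):
    print(f"{a:3d} {h:4d} {s: .3f}")
```

The resulting column would then go into the data table as a single quantitative variable (under a hypothetical name such as AgeHVA_PC1) in place of Age and HVA. The sign of a principal component is arbitrary, so the direction of any resulting effect would need to be read against the loadings.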

Sorry. I feel like this is turning into stats therapy more than AFNI advice. Feel free to direct me to other resources you’ve either developed or found useful.